By not quite working properly, the AI tool spits out something genuinely new

In recent weeks, we have seen two major developments in public discourse surrounding AI. The first was that story about the Google engineer declaring that LaMDA, the deep learning conversation bot he was working on, had acquired sentience. That of course was nonsense. Even if the tool does display some very sophisticated abilities to understand language in context, all this really shows is that a machine might be able to apply some limited elements of a theory of mind, despite actually lacking one itself. I’d say that software engineers should cure themselves of the urge to declare machines sentient by simply reading some effing Heidegger (the ‘being’ of Dasein is ‘care’, do I have to spell it out?), although I worry their take-home might end up being the Nazi stuff as opposed to the profound philosophical insight.

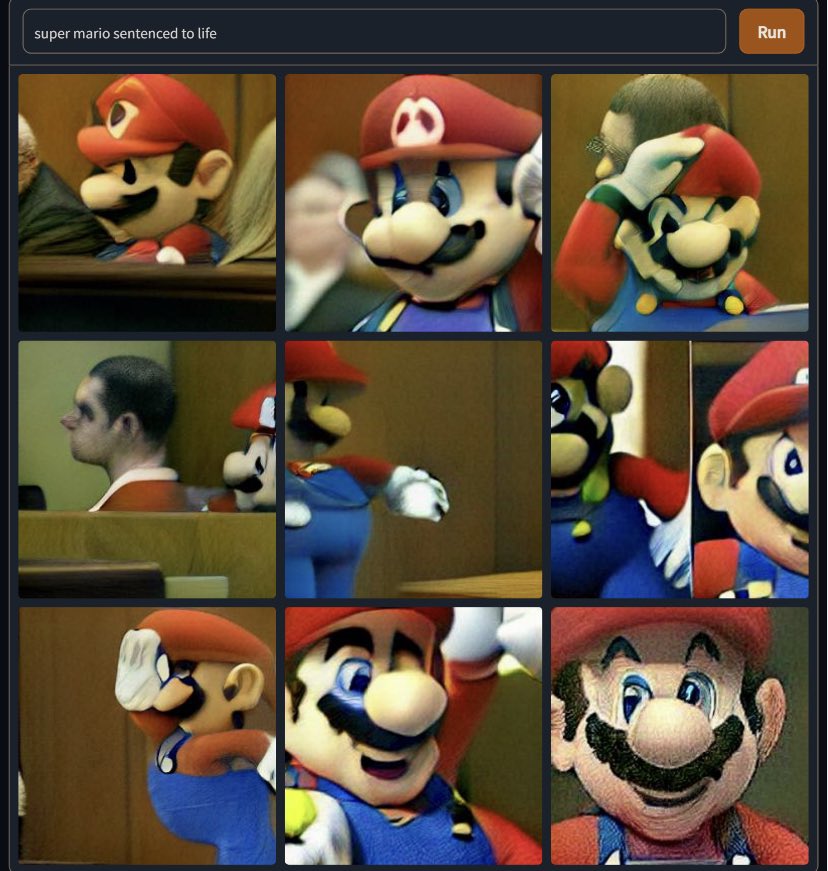

The other, hopefully more momentous development, was that people started using DALL-E Mini. The tool, which was launched by open source AI company Hugging Face almost a year ago, suddenly caught viral fire, as the general online public appeared to develop quite the taste for prompting an image generation tool to show it what it would look like if Super Mario was getting a life sentence, or Mr Blobby was in the Bayeux Tapestry, or George Costanza was holding an AK-47.

DALL-E Mini is not the only tool to generate images in this way – I have already written for ArtReview, for instance, about Wombo, another app which works in a pretty similar way (although it tends to want you to generate an image that conforms to one of its pre-set ‘styles’). But one thing we might say about DALL-E Mini is that it is a masterpiece of presentation. DALL-E images typically get shared as screenshots, with the prompt at the top captioning the nine images, usually blurred and distorted, perhaps senseless on their own, below. Instantly, almost no matter what has been produced, this is shareable content.

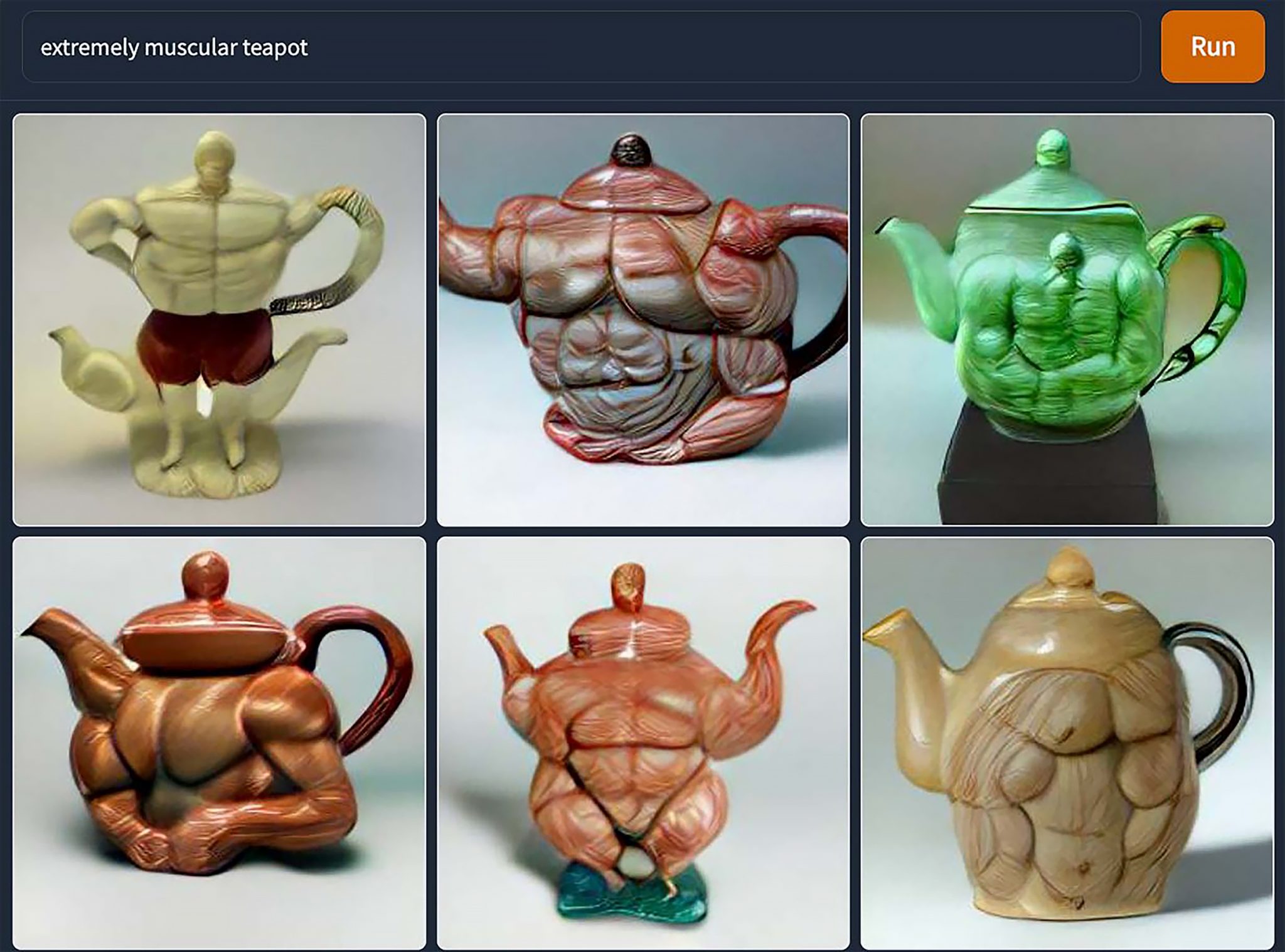

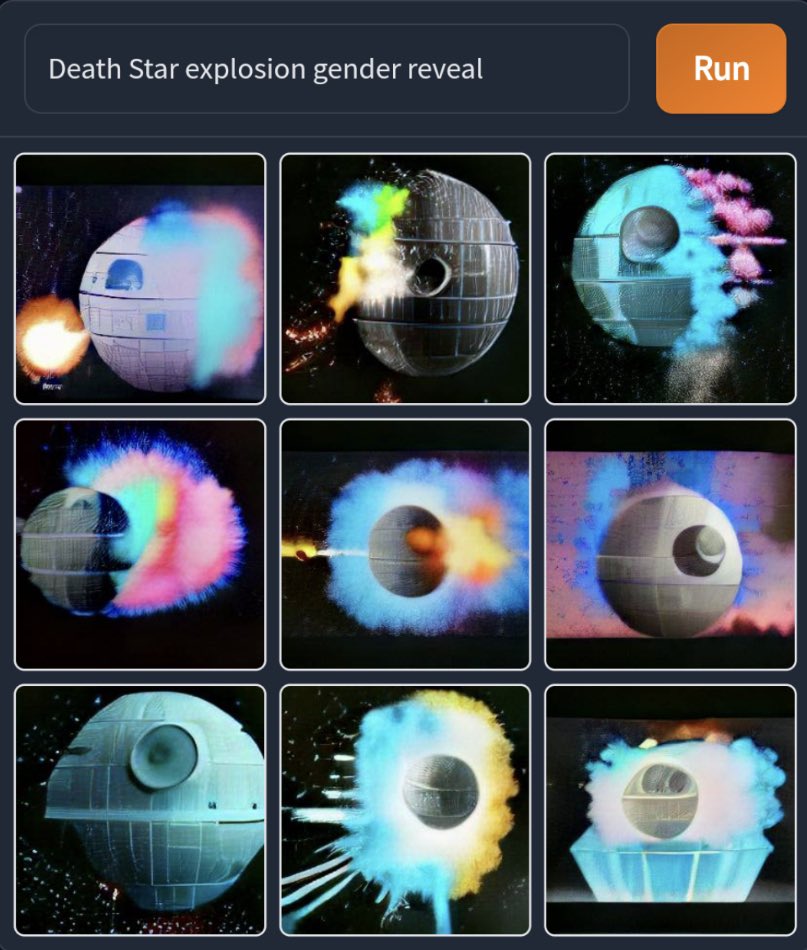

Another thing we might say about DALL-E Mini is that at its best, it trades in a kind of online grotesque. What I mean by this is that the images DALL-E Mini generates are ‘grotesque’ in the original sense, in that they depict bizarre fusions – hybrids like the human-animal-plant figures found in the ruins of Nero’s unfinished palace, the Domus Aurea. DALL-E Mini images show us things that would not otherwise be: muscular teapots, ‘gaming urinals’, or ‘Death Star gender reveals’.

Perhaps the key to the tool’s appeal is that it cannot really do faces. DALL-E Mini gives us the outline of familiar figures, it gives us their vague general sense. But the faces remain distorted, for the most part a strange, lost blur: the AI not powerful enough to reproduce them properly. This marks out what the tool is giving us as a fantasy: characters appear as they do when we imagine them, not quite complete.

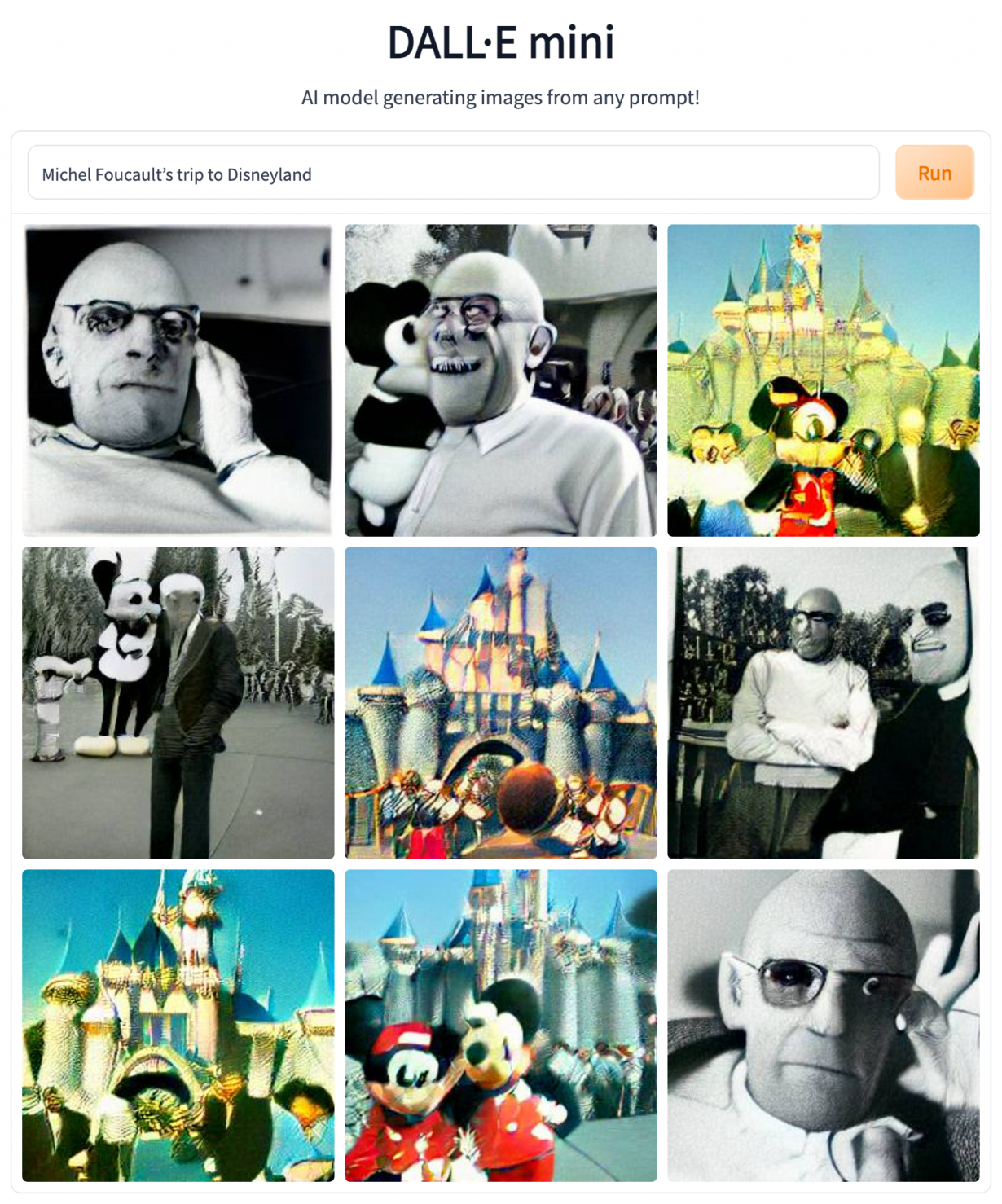

Interestingly, DALL-E Mini is apparently just the most publicly-accessible version of a more sophisticated tool which can produce things much closer to photo-realistic representations of reality. This, we might think, is more dangerous – given familiar fears about ‘deepfakes’ gradually shunting out things that have ‘really’ occurred from reality. But it would also, I’m sure, be nothing like as fun to play with. At the user end, DALL-E Mini is fun to use precisely because it doesn’t always work especially well: it surprises us, in the ways in which it realises our prompts. I might type in ‘Michel Foucault’s trip to Disneyland’, for instance, and be stunned by how strangely poignant it all looks, by the way that everything looks like an old photo of a fondly-remembered family vacation, by how he genuinely seems to be having a great time. Making images on DALL-E Mini is a bit like making music on a crappy old MIDI keyboard. Nothing really sounds like the names of the presets imply they ought to – but, precisely in failing to meet its own concept, there lies the instrument’s distinctive sound, its appeal.

As with any art, DALL-E Mini involves a kind of making-objective of imagination. Something is imagined, and an object is made of it (and of course that need not imply any 1:1 mapping of how something is imagined to how it is realised – when we make art ourselves, it can still surprise us). But whereas historically we have only had private access to our own imaginations, tools like DALL-E Mini allow us to mine what we think of as the internet’s objective imagination: the data-set of images on which it has been trained. We see how our prompts might be realised, against some great aggregate of what mental images the general public might call to mind, if we screamed at them: ‘The Gruffalo on Love Island,’ or ‘Karl Marx eating a Greggs sausage roll’.

In this of course, DALL-E Mini is subject to the same problem any deep-learning tool is: it can only give us back what we have already fed in. If society is biased in some given way, to give ‘doctor’ as male for instance, and ‘nurse’ as female, then so too will the AI tool be. AI tools reproduce the ever-same: Google’s LaMDA, for instance, would make a great improv partner, ‘yes-anding’ whatever its user happens to input to it. Usually the thought here is that one day they will completely lock us inside their code: AI, social media algorithms, the blockchain and its unhackable record. Tech, the future, will see us completely dominated by the past.

But despite this, despite its programming, DALL-E Mini can still spit out something genuinely new. Again, it is in not quite working properly that what it produces can have the status of ‘art’. Using DALL-E Mini, we can receive something novel, because what the machine outputs falls short of the data it has been fed on. In collaboration with a human user, DALL-E Mini can process the internet’s collective mind into that of an artist.

Perhaps this is the clue for how, in future, AI tools could continue to be used by people to ‘make art’, as opposed to just convincing fakes. We usually think that such tools would be ‘more sophisticated’ if they were better able to approximate to reality. But actually, I think, we should hope that they end up getting a whole lot weirder: that we get more and different AI image tools not to obsolete DALL-E Mini, but to exhibit different quirks. Only then can AI tools be used to produce something new; only then can they preserve, despite their programming, the autonomy of art.